Neural Network Model Analysis

We evaluated a Neural Network model for its ability to recognize patterns through layers of artificial neurons.

A hyperparameter tuning process identified the best-performing layer configuration for the network, and SMOTE was used to address class imbalance in the dataset.

Model Training and Results

Optimal Layer Configuration:

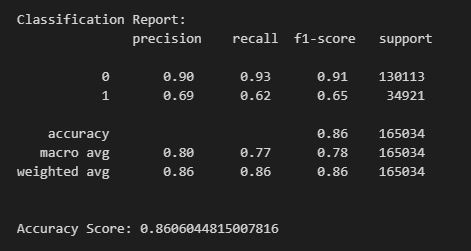

{'activation': 'relu', 'first_units': 26, 'num_layers': 4, 'units_0': 26, 'units_1': 21, 'units_2': 21, 'units_3': 11, 'units_4': 11, 'tuner/epochs': 20, 'tuner/initial_epoch': 7, 'tuner/bracket': 1, 'tuner/round': 1, 'tuner/trial_id': '0018'}

The model achieved an accuracy score of approximately 86.06%.
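The hyperparameter dictionary above lists five `units_*` entries while `num_layers` is 4, which can be confusing to read. The sketch below shows one way to interpret it. It assumes the search space was defined with a loop over `range(num_layers)`, a common tuner pattern in which entries beyond the active range are leftovers from other trials; the report does not confirm this. The helper names `active_layer_widths` and `tuner_metadata` are hypothetical, and how `first_units` was used in the original model is not stated, so it is left out.

```python
# Best hyperparameters, copied from the tuner output in the report.
best_hp = {
    'activation': 'relu', 'first_units': 26, 'num_layers': 4,
    'units_0': 26, 'units_1': 21, 'units_2': 21, 'units_3': 11, 'units_4': 11,
    'tuner/epochs': 20, 'tuner/initial_epoch': 7, 'tuner/bracket': 1,
    'tuner/round': 1, 'tuner/trial_id': '0018',
}


def active_layer_widths(hp):
    """Widths of the hidden layers that are actually in use.

    Assumption: only units_0 .. units_{num_layers - 1} are active.
    """
    return [hp[f'units_{i}'] for i in range(hp['num_layers'])]


def tuner_metadata(hp):
    """Split off the 'tuner/...' bookkeeping keys from the model settings."""
    return {key.split('/', 1)[1]: value
            for key, value in hp.items() if key.startswith('tuner/')}


print(active_layer_widths(best_hp))  # [26, 21, 21, 11]
print(tuner_metadata(best_hp))
```

Under that assumption the selected network has four hidden layers of 26, 21, 21, and 11 units with ReLU activation, and `units_4` plays no part in the final model.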

Below is the result of the Confusion Matrix:

Findings

Contrary to initial expectations, the Neural Network model did not outperform the XGBoost model. Factors contributing to this outcome include:

- XGBoost's renowned predictive accuracy, especially in the banking sector

- Its proficiency in handling imbalanced datasets

- The desirable feature interpretability provided by XGBoost, which is especially valued in finance and service industries, contrasted with the "black-box" nature of Neural Networks

SMOTE (Synthetic Minority Over-sampling Technique)

SMOTE is a technique that corrects class imbalance by generating synthetic samples for the minority class, which balances the class distribution and improves the representation of the minority class in the dataset.

SMOTE operates by:

- Identifying the Minority Class: Determining which class has fewer instances than the majority class.

- Finding Nearest Neighbors: For each sample in the minority class, SMOTE finds its k-nearest neighbors within the feature space.

- Creating Synthetic Samples: SMOTE generates synthetic samples through interpolation between the minority class sample and its neighbors. This process forms new samples on the line segments connecting the minority class samples to their selected neighbors within the feature space.
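The three steps above can be sketched in a few lines of plain Python. This is a minimal illustration and not the implementation used in this project, which the report does not specify; in practice a library such as imbalanced-learn's `SMOTE` would be the usual choice. The function names `minority_label` and `smote_sketch` are hypothetical, and as a simplification the sketch picks base samples at random instead of generating a fixed number of synthetic points per minority sample.

```python
import math
import random
from collections import Counter


def minority_label(labels):
    """Step 1: return the label with the fewest instances."""
    return min(Counter(labels).items(), key=lambda item: item[1])[0]


def smote_sketch(minority, n_new, k=5, seed=0):
    """Steps 2 and 3: create n_new synthetic points from minority samples.

    `minority` is a list of numeric feature vectors from the minority class.
    """
    if len(minority) < 2:
        raise ValueError("need at least two minority samples to interpolate")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)

    # Step 2: for each minority sample, find its k nearest minority neighbours
    # by Euclidean distance in feature space.
    neighbours = []
    for i, x in enumerate(minority):
        others = [j for j in range(len(minority)) if j != i]
        others.sort(key=lambda j: math.dist(x, minority[j]))
        neighbours.append(others[:k])

    # Step 3: pick a sample and one of its neighbours, then place a new point
    # at a random position on the line segment between them.
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        j = rng.choice(neighbours[i])
        gap = rng.random()  # interpolation factor in [0, 1)
        synthetic.append([a + gap * (b - a)
                          for a, b in zip(minority[i], minority[j])])
    return synthetic


# Usage with made-up toy data: class 1 is the minority.
X = [[0.0, 0.0], [0.2, 0.1], [0.9, 1.0], [1.0, 0.8], [0.8, 0.9]]
y = [1, 1, 0, 0, 0]
label = minority_label(y)
X_min = [x for x, target in zip(X, y) if target == label]
new_points = smote_sketch(X_min, n_new=1, k=1)
print(label, len(new_points))
```

Because each synthetic point is a convex combination of two existing minority samples, it always lies between them, which is why SMOTE fills in the minority region without extrapolating beyond the observed samples.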